An update (Patreon)


It has been a couple of weeks since the last build and I've been a bit quiet, so here is an update. When I am quiet like this it just means I am busy programming. To me the most important thing is to keep programming every day because there is still so much to do.

I am trying to use my time as efficiently as possible. Sometimes that means staying focused on one thing instead of swapping between different tasks, and not fixing bugs in parts of the code that I know are going to be rewritten. So some bugs are going unfixed for now but will get cleared up eventually.

macOS trackpad support

I haven't started it yet. It is one of many things to do but I am just mentioning it here because it turns out many macOS users absolutely love their trackpads and so they are vocal about Blockhead's terrible trackpad support. I was also sent a trackpad in the mail by a very nice and handsome man (thank you Samir) so I am ready to go as soon as I get around to it.

In Blockhead, zooming in and out happens in steps, mapping to the stepped events that mouse wheels generate. The waveform rendering system takes advantage of this to optimize things: if the waveform of a block is fully rendered at a certain zoom level then Blockhead just caches the image and reuses it whenever that block is viewed at that exact zoom level. The cache is only dirtied when the block's waveform changes.
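To make the caching idea concrete, here is a minimal Python sketch. It is not Blockhead's actual code: the `WaveformCache` class, its method names, and the toy "rendering" (one peak byte per column) are all assumptions made for illustration. The only parts taken from the description above are the cache key (block plus exact zoom level) and the rule that the cache is dirtied when the block's waveform changes.

```python
from dataclasses import dataclass, field


@dataclass
class WaveformCache:
    """Hypothetical cache: one rendered image per (block, zoom level) pair."""
    images: dict = field(default_factory=dict)
    render_count: int = 0  # only here so the caching effect is observable

    def get(self, block_id, zoom_level, samples):
        """Return the cached image for this exact zoom level, rendering if needed."""
        key = (block_id, zoom_level)
        if key not in self.images:
            self.images[key] = self._render(samples, zoom_level)
        return self.images[key]

    def mark_dirty(self, block_id):
        """Drop every cached image of a block whose waveform has changed."""
        self.images = {k: v for k, v in self.images.items() if k[0] != block_id}

    def _render(self, samples, zoom_level):
        # Stand-in for real rendering: one peak value (0..255) per column.
        self.render_count += 1
        step = max(1, len(samples) // (zoom_level * 4))
        peaks = [
            max(abs(s) for s in samples[i:i + step])
            for i in range(0, len(samples), step)
        ]
        return bytes(int(p * 255) for p in peaks)
```

Because the key contains an integer zoom level, a fractional zoom such as 3.46549231 would almost never hit the cache, which is the problem with smooth pinch zooming.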

One thing I have noticed about trackpads in general on macOS is that when you pinch to zoom in/out then generally this happens smoothly, directly mapping to your finger movement. This runs counter to how zooming is implemented in Blockhead because instead of having zoom levels 1, 2, 3, 4, 5, etc... we would have zoom levels like 3.46549231 or whatever. This breaks the waveform caching system and raises some questions:

- Should we now render the waveform twice, once at zoom:3 and once at zoom:4 and then interpolate between them to generate the final result?

- Should I just keep stepped zooming even when using a trackpad? I would probably try to add some kind of visualizer that appears while pinching which shows where the stepping points are.

- Is the caching system even needed or can it be implemented in a better way?
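The first two options can be sketched in a few lines of Python. This is only an illustration of the arithmetic, with hypothetical function names (`blend_levels`, `snap_zoom`). The actual cross-fade between two rendered waveforms is not shown, and nothing here is a decision about how Blockhead will work.

```python
import math


def blend_levels(zoom):
    """Option 1: pick the two neighbouring stepped levels and a blend weight.

    A weight of 0.0 means "all lower level", 1.0 means "all upper level".
    """
    lower = math.floor(zoom)
    upper = lower + 1
    weight = zoom - lower
    return lower, upper, weight


def snap_zoom(zoom):
    """Option 2: keep stepped zooming by snapping to the nearest level.

    The minimum level of 1 is an assumption for this sketch.
    """
    return max(1, round(zoom))
```

With option 1 the cached images at the two integer levels stay useful, at the cost of blending two of them on every frame. With option 2 the cache works exactly as it does today.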

The waveform rendering system in its current form does have some issues, and a couple mysterious bugs that are so rare that I never figured them out. So I am planning to rewrite that stuff eventually, at which point I will likely investigate if smooth zooming is feasible (bear in mind Blockhead does a lot more real-time waveform updating than a typical audio program so just because you have seen smooth waveform zooming somewhere else, doesn't mean it is trivial to achieve in Blockhead.)

What I'm working on now

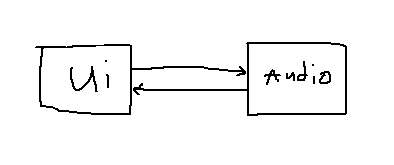

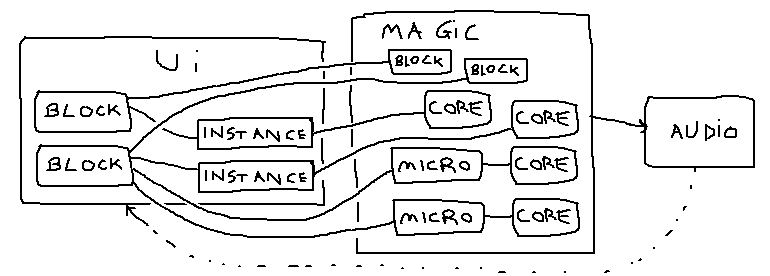

Up until now Blockhead's basic threading architecture has been something like this:

This is a pretty sensible and typical way of doing things in an audio application.

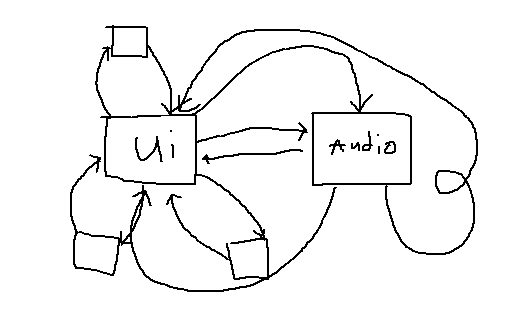

The diagram is very simplified because in Blockhead there's a bunch of other threads flying around too:

- all sample loading happens in another thread

- bouncing and baking happens in another thread

- some of the manipulator processing happens in another thread

There is also not one basic channel of communication between the UI and Audio threads as the diagram might lead you to believe.

So the reality is probably something more like this:

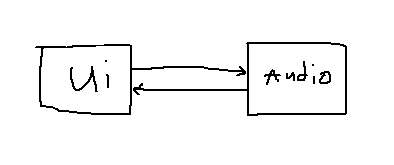

In the context of what I'm working on right now though, the first diagram is more useful, because Blockhead is at the point now where simply having the UI and Audio thread interact with one another is unsuitable. So basically what I'm doing is going from this:

...to this:

I am introducing an intermediate processing thread that sits in between the UI and Audio systems. The key point is that the UI no longer sends data directly to the Audio system. Instead it's going to be doing everything by passing messages to this intermediate system which will perform the work of generating data to be passed on to the Audio system.

The intermediate thread can communicate data back to the UI. The Audio thread can too but I represented this with a dotted line because the data being sent back directly from Audio to UI is very simple and limited (with a few exceptions it's mainly just things like output peaks, so that the UI can animate the level meters.)
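Here is a rough Python sketch of that message flow. Everything in it is an assumption for illustration: the function names, the `("gain", value)` message shape, and above all the use of blocking `queue.Queue` objects, which a real audio thread would avoid in favour of lock-free structures. It only shows the shape of the arrangement: the UI talks to the intermediate thread, the intermediate thread prepares data for the audio thread, and the audio thread sends back nothing but simple peak values.

```python
import queue
import threading

STOP = object()  # sentinel that tells a worker to shut down


def magic_worker(inbox, to_audio):
    """Intermediate thread: turns UI messages into data for the audio thread."""
    model = {}
    while True:
        message = inbox.get()
        if message is STOP:
            to_audio.put(STOP)
            return
        name, value = message
        model[name] = value
        to_audio.put(dict(model))  # hand over a copy, never shared state


def audio_worker(inbox, to_ui):
    """Audio thread: consumes prepared data, reports only simple peaks back."""
    while True:
        model = inbox.get()
        if model is STOP:
            return
        to_ui.put(("peak", model.get("gain", 0.0)))


def run_demo(ui_messages):
    """Play the role of the UI: send messages, then collect the peaks."""
    ui_to_magic = queue.Queue()
    magic_to_audio = queue.Queue()
    audio_to_ui = queue.Queue()
    threads = [
        threading.Thread(target=magic_worker, args=(ui_to_magic, magic_to_audio)),
        threading.Thread(target=audio_worker, args=(magic_to_audio, audio_to_ui)),
    ]
    for t in threads:
        t.start()
    for message in ui_messages:  # the UI never touches the audio queue
        ui_to_magic.put(message)
    ui_to_magic.put(STOP)
    for t in threads:
        t.join()
    peaks = []
    while not audio_to_ui.empty():
        peaks.append(audio_to_ui.get())
    return peaks
```

Calling `run_demo([("gain", 0.5), ("gain", 0.8)])` pushes two parameter changes through the intermediate thread and returns the two peak messages that come back from the audio side.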

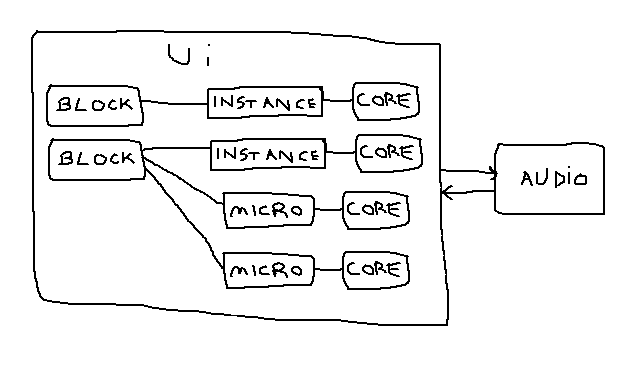

Going back to the original diagram, we can add more detail to the UI box to represent a project containing one workspace, with one track, with one lane, with one block, and two instances of the block:

As you can see, the audio system has no concept of "cloning". By the time the data gets to the audio thread those block instances just look like individual blocks with their own data. I lied to you though, the diagram actually looks like this:

What's the point of these "CORE" things? In this situation they look pointless, but they're required when you consider the intersection of several Blockhead features: "macros", "cloning", and "manipulators".

With a single instance of a macro which contains one track with one lane with one block instance, the diagram gets much more complicated:

In very simplified terms, the BLOCK INSTANCE inside the MACRO CORE is the data that the user is interacting with when they open up a macro and edit the block inside. When they play back the internal macro workspace, the BLOCK INSTANCE inside the MACRO CORE is what they are hearing.

When the user goes back up the macro hierarchy to the top-level workspace that contains the macro instance, what they are hearing when the project plays back is actually the MICRO BLOCK INSTANCE, which is an invisible version of the block instance which replicates any changes to the editable block instance. (Again this description is way too simple because it is completely disregarding the existence of the baking system, which will bake the entire macro instance to a sample when it's not being edited. So actually the user will just hear the baked macro when the project plays back.)

"Micro" is just the word I use internally for these invisible versions of objects that get generated whenever macros are in play. Since Micros have their own Cores where all their actual data lives, they can have manipulators applied to them individually.

Now if we create a second instance of the same macro block, and put it at the same level in the macro hierarchy, the diagram looks something like this:

And if Patreon is still doing that thing where it shrinks images down when they're too big then I guess this will be unreadable.

I am not going to bother drawing any diagrams to show what happens when you put macros inside macros because I would be here all day. And the only reason I started drawing these is to try to illustrate something more high-level. So let's remove some details from that final diagram (and forget about lanes, tracks, and workspaces):

The point I wanted to make was, when it comes to moving things from the UI into this new intermediate layer which will now sit in between the UI and Audio, I have to make a decision about where to draw this new boundary defining what is UI and what isn't.

The only data that really needs to stay in the UI thread is the objects that the user is interacting with, and that is "Blocks" and "Block instances". All this "micro" and "core" bullshit is invisible to the user so ideally the architecture I am aiming for is this:

The intermediate thread needs to know about blocks and block instances. Internally it generates cores and micro cores. Block and core data is passed on to the audio thread. At this point the "Block" and "Block instance" objects in the UI are basically just concerned with input processing and visuals and that's it.

There is only one place where the UI actually does need to know about micros, and that is the breadcrumb trail navigation thing that appears at the bottom when you have nested workspaces. Since the user can enter the same macro instance from multiple paths, the UI needs to know exactly where it is in the macro hierarchy to generate that breadcrumb trail. I think it could be generated in a different way though (by keeping track of where the user is coming from at each step when they traverse the macro hierarchy), and if all else fails then it is not such a disaster for the UI to query the MAGIC layer for the information it needs.
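The "keep track of where the user is coming from" idea could look something like the following Python sketch. The `MacroNavigator` class and its method names are hypothetical, and this is just one possible way to do it: the UI keeps a stack of the macro instances it has entered, so the breadcrumb trail can be built without knowing anything about micros.

```python
class MacroNavigator:
    """Hypothetical UI-side helper that records the path taken into macros."""

    def __init__(self, root_name):
        self._trail = [root_name]

    def enter(self, macro_instance_name):
        """Called when the user opens a macro instance from the current workspace."""
        self._trail.append(macro_instance_name)

    def leave(self):
        """Called when the user goes back up one level (never past the root)."""
        if len(self._trail) > 1:
            self._trail.pop()

    def breadcrumbs(self):
        """Return the trail as display text, e.g. for the bottom navigation bar."""
        return " > ".join(self._trail)
```

Since the trail is recorded step by step, entering the same macro from two different parents naturally produces two different breadcrumb trails.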

So this diagram is probably where I want to get to eventually. For now though, this is far too big a change. So what I'm doing right now looks more like this:

Micros and cores are still generated in the UI thread for now. The intermediate thread takes in Block data and Core data and generates a model to pass on to the audio thread. This is all pretty much set up now so I'm just working on the details.

What does this magic intermediate thread actually do? The whole point is to move a bunch of expensive processing out of the UI and this is mainly to do with maintaining the baking graph (a dependency graph between cores which is used by the baking thread to figure out what needs to be baked and when), and the manipulation graph (a graph connecting manipulators to target parameters which is used to figure out when core parameter data needs to be regenerated.)
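As a rough illustration of what "figuring out what needs to be baked and when" can involve, here is a small Python sketch of a dependency graph between cores. The function name, the core names, and the dictionary representation are all assumptions, not Blockhead's data structures. Given the cores that changed, it marks them and everything that depends on them as dirty, then orders the dirty cores so that dependencies are baked first, using the standard library's `graphlib.TopologicalSorter`.

```python
from graphlib import TopologicalSorter


def cores_to_rebake(dependencies, changed):
    """Return dirty cores in an order where dependencies come first.

    dependencies maps each core to the set of cores it depends on.
    changed is an iterable of cores whose data was modified.
    """
    # Invert the graph so we can walk from a core to the cores that use it.
    dependants = {}
    for core, deps in dependencies.items():
        for dep in deps:
            dependants.setdefault(dep, set()).add(core)

    # Everything reachable from a changed core is dirty.
    dirty = set()
    stack = list(changed)
    while stack:
        core = stack.pop()
        if core in dirty:
            continue
        dirty.add(core)
        stack.extend(dependants.get(core, ()))

    # static_order() yields every core after all of its dependencies.
    order = TopologicalSorter(dependencies).static_order()
    return [core for core in order if core in dirty]
```

For example, if a hypothetical `"top"` core depends on `"macro"`, which depends on `"block_a"` and `"block_b"`, then changing `"block_a"` leaves `"block_b"` alone but requires re-baking `"block_a"`, `"macro"` and `"top"`, in that order.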

- Baking operations already happen in their own thread so the magic thread will now communicate with the baking thread to let it know what to do (so the UI thread doesn't need to interact with the baking system anymore.) This involves detecting when cores have moved in relation to their dependent and dependency cores, and when their parameters have changed.

- Once the manipulation graph is up to date (which can be an expensive operation in itself if there are many nested macros involved), the magic thread will also now deal with applying manipulators to their target parameters.

- The magic thread is now also responsible for detecting feedback loops whenever send/receive buses are present. This is another non-trivial operation which was being done in the UI thread. It's necessary to turn off baking for any cores involved in a feedback loop. The only thing the UI needs to do is display a background pattern on the blocks inside a loop so the magic thread will transmit this information back to the UI.

- Once the magic thread's own internal representation of the system is up to date, it will perform the appropriate operations to update the audio model and pass it on to the audio thread. (The audio model is a stripped down representation of the system containing only the data that is required for audio processing, with all the parameter data pre-manipulated and ready to go, and everything formatted in a way that is efficient for the audio thread to deal with.)
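The feedback loop detection mentioned above can be sketched as a plain graph search. This Python version is an assumption-heavy illustration, not the real implementation: the function name and the `sends` dictionary (core name to the set of cores it sends audio to) are hypothetical, and the per-core search is the simplest approach rather than the fastest. A core is considered part of a loop if following send connections from it eventually leads back to itself.

```python
def cores_in_feedback_loops(sends):
    """Return the set of cores that sit on at least one send/receive cycle.

    sends maps each core to the set of cores it sends audio to.
    """
    def reaches_itself(start):
        # Depth-first walk along send connections, looking for `start` again.
        stack = list(sends.get(start, ()))
        seen = set()
        while stack:
            node = stack.pop()
            if node == start:
                return True
            if node in seen:
                continue
            seen.add(node)
            stack.extend(sends.get(node, ()))
        return False

    nodes = set(sends) | {target for targets in sends.values() for target in targets}
    return {node for node in nodes if reaches_itself(node)}
```

The result is the information the UI would need back: the set of blocks to draw the background pattern on, and the set of cores for which baking has to be switched off. A core that only feeds into a loop without receiving from it is not included.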

I won't go into detail on baking, manipulators, and snapshots, because I have already drawn too many diagrams and I already wrote about those systems in previous posts, but I am taking the opportunity to pretty much rewrite the baking system, and the snapshot system too. The baking system has always been a bit of a mess, and the snapshot system is overly complex for what it needs to be. Creating this intermediate processing thread is not just about the fact that it's a separate thread - it's also an abstraction layer between the UI and Audio systems, and what I am doing should actually simplify things a lot and create clearer boundaries between these different systems leading to less spaghetti going forward.